Tuesday, 25 August 2009

Cloud - Likely to bite you on the arse

Thursday, 20 August 2009

Tech Review - PHD Virtual esXpress

Company Background

PHD Technologies was formed in 2002 and is based in Mount Arlington, New Jersey, USA. They have well over 1600 customers, including big enterprises such as Siemens, Barnes and Noble and Tyco, and they are extremely popular in the SMB and academic spaces.

Technology Background

PHD provides organisations with a cost-effective, robust backup tool. esXpress has been designed to tackle the problem of backup in virtualised estates since the ESX 2.x days, and now, in its third-generation release, it provides core functionality and technological advances that give PHD a leading edge over other players in the space and allow them to compete for enterprise custom thanks to their extensive technical offerings.

PHD uses VBAs (Virtual Backup Appliances) to perform backup and restore to either an RDM disk connected to your ESX hosts, an NFS share or even a VMDK file. VBAs are something PHD has used architecturally within its product set since 2006, and they enable backups to be performed with the following benefits for your virtualised estates:

- Negates the need for VCB agents/proxies, so esXpress can remove the need for agent-level backup of VMs hosted on entry-level ESX license versions

- Removes the need for backup agents within VMs or for VCB, meaning you can virtualise more thanks to the reduction in host overhead

- In current environments using VCB, it removes the need for a VCB proxy server, cutting the cost of that server and any SAN requirements

- Each backup VBA can perform 16 concurrent jobs, whereas competitors max out at 8 (more detail on the enhancements later)

- VBAs are completely fault tolerant in that they can be VMotioned to another host, and in the event of a disaster striking, VBAs can be installed extremely quickly with the OVF import functionality

esXpress 3.6 benefits

vSphere 4 Support

esXpress 3.6 provides full support for vSphere 4 and also includes a VC plugin that runs within the vCenter client. Additional administration can be performed via a web interface, which lets you manage the backup environment from any desktop without having to install GUIs wherever you go just to check and manage backups.

Data Deduplication

Massive reductions in backup space requirements can be achieved by using this feature. When VMs are backed up through VCB with conventional backup products such as Backup Exec or even NetBackup, the underlying backup software cannot natively perform inline dedupe; you need an appliance or an additional piece of software to do that, at additional cost. esXpress 3.6 provides inline data deduplication completely free of charge within your license entitlement, and PHD claim dedupe ratios of up to 25:1.
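To illustrate what inline block-level deduplication does, and how a ratio such as 25:1 is calculated, here is a minimal Python sketch. It assumes fixed-size 4 KB blocks fingerprinted with SHA-256; this is a generic technique chosen for illustration, not PHD's actual implementation, and the function names are hypothetical.

```python
import hashlib

BLOCK_SIZE = 4096  # assumption: fixed-size blocks; real products may use other schemes


def dedupe(images, block_size=BLOCK_SIZE):
    """Store each unique block once; return (store, recipes, dedupe_ratio)."""
    store = {}    # fingerprint -> block bytes, each unique block kept only once
    recipes = []  # per image: ordered fingerprints needed to rebuild it
    logical = 0   # bytes the backups would occupy without dedupe
    for image in images:
        recipe = []
        for offset in range(0, len(image), block_size):
            block = image[offset:offset + block_size]
            digest = hashlib.sha256(block).hexdigest()
            store.setdefault(digest, block)  # only the first copy is stored
            recipe.append(digest)
            logical += len(block)
        recipes.append(recipe)
    physical = sum(len(block) for block in store.values())
    ratio = logical / physical if physical else 1.0
    return store, recipes, ratio


def restore(store, recipe):
    """Rebuild one image from its recipe of fingerprints."""
    return b"".join(store[digest] for digest in recipe)
```

For example, two VMs cloned from the same 8-block template, each with one unique block of its own, store 10 unique blocks instead of 18, a ratio of 1.8:1. Real ratios depend entirely on how similar your VMs are.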

The real magic in esXpress happens in what PHD call their Dedupe appliance. It is built using the PHD SAN appliance, which is available as a separate download at http://www.phdvirtual.com/products/virtualization-utilities; the SAN appliance gives you the option of using shared VMFS across local DAS storage on your ESX hosts without buying a full back-end SAN. When using the Dedupe appliance and backup method, incremental VM restoration is also possible for deduplicated backups.

esXpress deduplication technology also means you can back up and restore just the delta changes across your VMs. This is extremely beneficial for remotely located VMs: the delta block change capability means you can use the WAN to back up across sites and reap the benefits of a completely centralised backup strategy. Centralised backup brings benefits such as reduced manpower overheads for changing backup media and monitoring jobs remotely, improved security from having data stored offsite, possible reductions in tape vaulting costs and many other benefits pertinent to your organisation when removing remote infrastructure.
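The reason delta block backup suits a WAN is that only changed blocks need to cross the link. A minimal Python sketch of the general idea follows, assuming fixed 4 KB blocks and SHA-256 fingerprints kept from the previous run; the function names are hypothetical and this is not how esXpress is documented to work internally.

```python
import hashlib

BLOCK_SIZE = 4096  # assumption for illustration


def block_digests(image, block_size=BLOCK_SIZE):
    """Fingerprint every block of an image; kept from the previous backup run."""
    return [hashlib.sha256(image[i:i + block_size]).digest()
            for i in range(0, len(image), block_size)]


def changed_blocks(previous_digests, current_image, block_size=BLOCK_SIZE):
    """Return {block_index: bytes} for blocks that differ from the previous backup."""
    changes = {}
    for index, offset in enumerate(range(0, len(current_image), block_size)):
        block = current_image[offset:offset + block_size]
        if (index >= len(previous_digests)
                or hashlib.sha256(block).digest() != previous_digests[index]):
            changes[index] = block
    return changes


def apply_delta(base_image, changes, new_length, block_size=BLOCK_SIZE):
    """Rebuild the current image at the central site from the old copy plus the delta."""
    block_count = -(-new_length // block_size)  # ceiling division
    blocks = [base_image[i:i + block_size]
              for i in range(0, block_count * block_size, block_size)]
    for index, block in changes.items():
        blocks[index] = block
    return b"".join(blocks)[:new_length]
```

Changing a single byte in a 40 KB image means only one 4 KB block is sent over the WAN, and the central copy can still be rebuilt exactly.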

Improved restores and backup streaming

In my opinion restores are the most important aspect to consider in any backup technology; it is no good backing up quickly if the restore activity is long and increases your RTO. File-level restores with VCB-based virtual backup software are never quick, mainly because you have to mount the VMDK and then extract the file, and for a 15-20GB file this can take considerable time. esXpress 3.6 has improved multi-user, point-and-click restore functionality from within the esXpress Java GUI; the extraction is made possible by a unique executable file format rather than a TAR-like file.

The total number of backups per host is always a limiting factor in virtual backup architectures. VMware recommends (setup dependent) 4-6 concurrent VCB jobs per ESX host, whereas esXpress 3.6 now allows a total of 16 concurrent backup jobs per ESX host, meaning shorter backup windows.
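To see why concurrency shortens the backup window, here is a rough Python model of a job queue with a fixed number of slots. It assumes jobs are independent and that throughput per job does not degrade as concurrency rises; in practice storage and network contention will eat into the gain, so treat the numbers as a best case. The function name is hypothetical.

```python
import heapq


def backup_window(durations_min, concurrent_jobs):
    """Estimate total elapsed minutes when queued jobs run in a fixed number of slots."""
    if concurrent_jobs < 1:
        raise ValueError("need at least one concurrent job slot")
    slots = [0.0] * concurrent_jobs  # time at which each slot next becomes free
    for duration in durations_min:
        start = heapq.heappop(slots)  # the earliest free slot takes the next job
        heapq.heappush(slots, start + duration)
    return max(slots)
```

For 32 VMs taking 30 minutes each, `backup_window([30] * 32, 4)` gives 240 minutes, 6 slots gives 180 and 16 slots gives 60, provided the storage and network keep up.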

Product Summary

esXpress is a great backup tool and touts some great niche technical features. It is most certainly a good product for the SMB space because it does not require VCB, and it can also be used within larger virtualisation estates thanks to its concurrency and deduplication benefits.

I think the most attractive component in esXpress is its dedupe capability and the fact that you can exploit a side benefit of it for remote backup across your WAN; centralised backup is becoming a very popular strategy in virtualised estates.

Check out esXpress at its website http://www.phdvirtual.com, where a full 30-day demo is available at http://phdvirtual.com/products/esxpress-virtual-backup. For any VMworld attendees, PHD are also at exhibitor stand #1502 at VMworld 2009 in San Francisco (http://www.vmworld.com/community/exhibitors/phdvirtual/), so pass by the stand and check them out.

Sunday, 16 August 2009

As if by cloud magic...

I have had all week to digest the views and opinions of various industry analysts and bloggers on the Spring acquisition, and now here's my attempt (ARSE COVER DISCLAIMER - I am not a software architect/coder/guru/white sandals & sock wearer, so excuse any rubbish) at predicting where the purchase will lead VMware's current business model and what will evolve from the acquisition. Lastly, I also highlight what the industry needs from any of VMware's cloud offerings.

VMware - Now not just a virtualisation company?

VMware are now kick-starting themselves into providing multi-tiered service offerings, and with the latest acquisition they are slowly creeping up the stack past just the underlying datacentre virtualisation technology. I feel this natural growth is mainly due to demand from the industry and current customers for more agile cloud-based services and coverage across more of the datacentre.

The SpringSource acquisition enables VMware to move into the PaaS (Platform as a Service) market. This effectively means they can provide organisations with an end-to-end operational stack, from the underlying virtual machine workload through to the presentation layer, for running JVM-type workloads and web services. With this solid framework VMware can provide presentation-layer building blocks alongside technology such as vApp, going further than just the solid underlying platform infrastructure present within the VDC-OS initiative.

What does this mean for an Infrastructure bod?

Some people may be asking themselves (like me), "Well, VMware have bought these guys, so what does that mean for me and my current investments in VMware technology?" From my observation the Spring acquisition isn't going to be a tactical point purchase like Propero, Beehive and Dunes. I see Spring being the key driver for VMware to start providing a sustainable, reputable cloud-based offering for current enterprise customers and for new potential customers who would otherwise be considering Azure and Google App Engine. Looking at the hooks available within Spring and Spring.NET, I presume you will even be able to extend to and use the app components available on Microsoft Azure services. That would be a very smart move if it happens, as it would give you the flexibility to run certain components on alternative clouds and not be locked into the complete end-to-end VDC-OS stack.

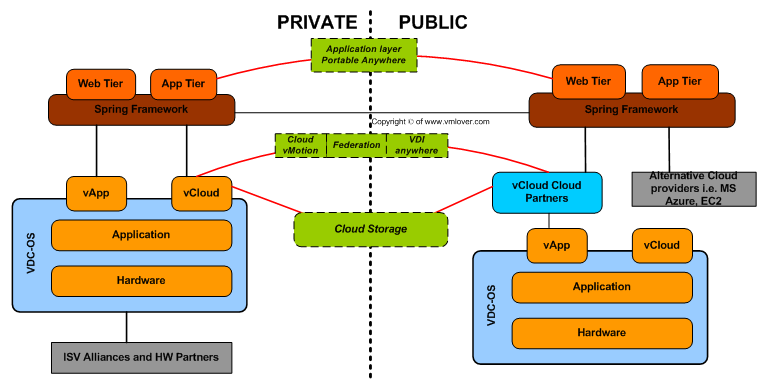

Above is a self-compiled diagram of my vision of what the architecture within the VMware suite may start to look like. You might think it's a load of rubbish, and if you do then please pass comment; however, I feel we will now see Cloud being accepted more and more, thanks to VMware having an open framework, the ability to host the application anywhere, and massive scalability options such as bursting workload on demand for extra capacity and offering your organisation standard machine building blocks with vApps and virtual appliances.

The future vision

vApps and virtual appliances will start to grow further with Spring and its inherent turnkey middleware and web service building-block stacks, and with the underlying vSphere platform available, this complete end-to-end stack can now be turned into a movable, flexible workload across private and public cloud infrastructure. With that I would like to see live migration capability between clouds and certainly more portability for external cloud usage. Private clouds can be hosted internally via the vCloud APIs; for example, a company like SAP could host SAP instances privately without that hosted workload being limited by a firewall or boundary between clouds. Other parts of the vision include:

- Federated security between clouds for any running workloads and applications, regardless of which datacentre or cloud hosting partner the platforms and applications sit on. I want easy security and the ability to move to cloud providers whenever I want, without penalty or obstacle.

- Virtual desktop will evolve to revolve less around a centrally hosted OS and more around an application-oriented strategy, with applications hosted on any cloud and accessible via any browser or OS, similar to how Adobe AIR apps work.

- Burst capability: with intuitive interaction between app presentation and the core VDC-OS, I would hope we see more automated orchestration, for example when you need to burst to more workload. By using vApps you can essentially achieve this with pre-automated configurations and with knowledge, held in the metadata, of any external dependency, such as a database server that could become constrained if more app stack workload were added.
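The dependency-aware burst idea in the last point can be sketched in a few lines of Python. Everything here is hypothetical: vApp metadata does not necessarily carry these fields, and the `Dependency` class, its capacity figures and `max_burst_instances` are made up purely to show the reasoning of capping a burst at the tightest downstream dependency.

```python
from dataclasses import dataclass


@dataclass
class Dependency:
    """Hypothetical metadata for something the app stack depends on."""
    name: str
    capacity: float           # e.g. max connections the database can handle
    load_per_instance: float  # extra load each new app instance adds
    current_load: float


def max_burst_instances(requested, dependencies):
    """Return (instances allowed, limiting dependency name or None)."""
    allowed, bottleneck = requested, None
    for dep in dependencies:
        headroom = dep.capacity - dep.current_load
        fits = max(0, int(headroom // dep.load_per_instance))
        if fits < allowed:  # this dependency would be constrained first
            allowed, bottleneck = fits, dep.name
    return allowed, bottleneck
```

With a database at 300 of 500 connections and each app instance needing 40, a request to burst by 10 instances would be capped at 5, with the database reported as the bottleneck; an orchestrator could then scale the database first.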

I am going to go over some of my predicted possibilities in more detail in future posts once the dust settles on the Spring acquisition, but one of the prime benefits I can see is the possibility that VMware may start building de facto, affordable middleware/web stacks, just as Red Hat do with JBoss. This may additionally mean that we move away from the performance and tuning arguments and issues of running Java workloads within VMware machines, away from ISV compatibility issues, and away from the expensive licensing penalties that the likes of Oracle impose for using DRS and multiple hosts to our benefit.

Summary

Well, that's enough babbling and digressing for one post. Hopefully I have raised some possible avenues that will open up for organisations from yet another VMware acquisition. This one is certainly an eye-opener, and it is going to be very interesting to see what the vibe is like at VMworld 2009 about the direction VMware are now moving in, and to see in 6-12 months' time how VMware shape up as a company after such a diverse acquisition.

Wednesday, 5 August 2009

Azure - Microsoft's new baby

Looking at how Hyper-V has performed in the analyst popularity stakes since its release, I don't think Microsoft's market share is any better. Analysts are saying that only Citrix XenServer is really competing, with single-percentage gains in market share against VMware, and if anything Uncle Larry at Oracle is likely to gain more uptake of Oracle VM thanks to the Sun acquisition finally closing.

My view (if you want it) on Microsoft's strategy for Hyper-V is that they are now shifting focus to the Azure cloud platform rather than the underlying datacentre server virtualisation platform. My justification is that, in reality, Microsoft see Cloud almost as a software layer and an extension of services within your datacentre today, such as Exchange and SQL. Azure, being hosted within Microsoft's datacentres, will no doubt rely on Hyper-V, but to be honest it is the interfaces to components that Microsoft will concentrate on exploiting; they are not in the game to make money from a hypervisor, which is why it is included with Windows 2008.

Microsoft would never be taken seriously running capacity planner exercises or engagements to work with you on configuring optimal infrastructure platforms for large consolidation ratios, and I feel neither would Gold Certified MS partners (most are VMware resellers anyway). Instead, I feel Microsoft will stick to what they know best and use their internal developer teams, who are capable of running such a beast in the application arena. This lets them kill two birds with one stone, following a similar strategy to Google whilst gaining a foothold in the cloud services space.

There is speculative rumour that the domain www.office.com has been bought and registered for a new online version of Office, probably arriving around the time Office 2010 is released. MS also has Exchange 2010 on the roadmap for next year, which is going to be tailored for cloud environments. So, if nothing else, focusing on the hypervisor arms race with Hyper-V and flogging a possibly dead horse could lose Microsoft ground to Messrs Page and Brin. Microsoft will no doubt spend less development money on Azure than on designing hypervisor-related technology; I expect most of the development will build on blueprinting already done for things such as Live Services, MOSS and collaboration tools such as MSN Messenger. And you don't necessarily need server virtualisation capability to run a cloud: you can provide ASP-like services with just a fully optimised application stack. Microsoft has a better chance of providing this with current portfolio offerings such as MOSS and Exchange and future technology on the horizon, meaning there is no need to focus on the underlying platform.

Cloud computing still has a rather large volume of unanswered questions, and the technology is still very much at a bleeding-edge stage. It is clear, though, that even the likes of VMware are not focusing on the hypervisor platform as much and are having to diversify and concentrate efforts on the bigger picture of a cloud environment with vCloud and other core components within the whole VDC-OS. Following this strategy of being mostly an application cloud provider means Microsoft isn't seen as the conquer-all vendor taking on the hypervisor market; they are still classed as a fluffy software provider but are able to keep on track in competing with the likes of Google. It also means they can resell services through partners and hosting companies, and ensure that software partners such as Quest can still use the APIs they offer to provide niche partner software beneficial to both parties.

I will find it interesting to see if my predictions come true; if anything it's worth a stab at guessing, as in today's fast-paced world of Cloud any prediction can be blown away by the wind in minutes :)

Sunday, 2 August 2009

Virtualisation within today's IT Frameworks

The higher level issues

Today we have mature IT libraries and frameworks such as ITIL, MOF and many others, which over the years have focused on building operational and technical processes that you can tailor to run a streamlined infrastructure (in theory). Libraries like ITIL had to start with a baseline somewhere, but with technology growth they need to continuously evolve with changing fads and paradigms. For example, 3-4 years ago hardly anyone virtualised servers like they do today, nobody had heard of the Cloud let alone looked at using it, nobody outsourced as ridiculously as they try to today, and we certainly were not under the same financial constraints that we all face due to the credit crunch.

So enter enterprise server virtualisation into this arena around 2004/5, a technology that has had one of the biggest impacts on IT since the physical servers it has consolidated. It has seen many technological advances that have fully matured five years later, and it is now mature enough to suggest it will be here for many moons to come. However, even with the dramatic impact it has had, IT frameworks still appear to lag in addressing how virtualisation technology changes process within datacentre operations. When I google "ITIL and Virtualisation" I get very little to suggest I'm wrong.

Back at the ranch in the datacentre, new technology or not, operational processes still need to be followed to keep the ship running. Architects and operations guys who know virtualisation inside out, and have seen it mature to where it is today, desperately want these processes to change so that they are not continuously drowned in legacy process that limits the potential of the core technology they believe in. It isn't just virtualisation that suffers: add game-changing technologies such as Cisco UCS and EMC Symmetrix VMAX, which reduce process in the management and operation of the datacentre even further, and this is going to become more and more of a pain in the arse for you and me.

Virtualisation, and the underlying technology that supports the virtual landscape, is deployed as a point solution, as are the other example technologies changing the shape of datacentres: you procure them and they solve common datacentre problems. Virtualisation ecosystems, meaning the software and components you can deploy, can however reduce your process by default with very little need to implement any new-fangled add-ons or interfaces; you still need to ensure that this potential is exploited correctly.

Any problem areas of a datacentre that have been remediated by virtualisation previously had various processes and framework activities structured around them, expensively designed back when the infrastructure lived in the physical world. As an analogy, the physical world is rather like turning an oil tanker around in the Panama Canal. Now you have replaced the oil tanker with virtualisation technology that means everything can potentially move at 1000 MPH, so instead of the tanker you are turning around a hovercraft in the Panama Canal. This speed and agility can, however, be a double-edged sword: your processes now have to be a lot more streamlined and lean, and you need to stay on top of defining processes for any operational activity alongside any IT service management teams.

An example of the contrast is vSphere, where you now have features such as on-the-fly virtual machine resource upgrades, with Hot Add of CPU/RAM all done via the GUI as a soft change. The question is: does this type of thing require the full attention of the CAB? Surely it is low risk: it doesn't require an outage and it doesn't go wrong (yet). Other examples include runbook reductions in a DR scenario: using something like VMware SRM to replicate VMs negates much of the runbook, whereas previously it would probably need half a department of people and a runbook as long as your arm to initiate even a reduced-functionality test. When engaging your service management teams, areas worth walking them through include:

- Release Management - Start by covering how day-to-day service automation and features such as VMotion work, then work with them to define where you can reshape other process flows, for example a change mechanism for upgrading your virtual resources or deploying new VMs via an automated script.

- Root Cause Analysis/Incident Management - Explain that vSphere offers a fully visible, internal and extensible monitoring repository showing what has changed and when, which can remove the need for witch-hunts in RCA procedures. It's also extensible into current service management tools such as BMC Remedy, Veeam nworks with HPOV and MS SCOM.

- Change Management - Cover things such as how VMotion means you do not need to power off VMs to take down the ESX host, as the VMs can be migrated, and explain how other technology such as Storage VMotion can even reduce outages on your SAN.

- Capacity Management - Explain resource pools and the ESX scheduler. This doesn't need to be to VCP level; keep to a basic overview that virtual machines can be rate limited or given reservations within resource pools.

- Release Management - Explain that you can take a snapshot before any change is made to a VM for instant rollback and, service dependent, you may negate the need for CAB approval by having the change deemed low risk. Also explain that you can head off potential bottlenecks when P2V'ing machines with technology such as VMware AppSpeed.

- Continuity Management - Explain that the runbook can be completely dissolved with DR technology such as SRM, and that you won't need to organise outages during bank holiday weekends when you should all be enjoying a pint down the pub!
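For the Capacity Management conversation, a toy model can help explain reservations, limits and shares to people who are not VCPs. The Python sketch below is a deliberately simplified model of how the three interact when a resource is contended; it is not the ESX scheduler's actual algorithm, and the VM names, MHz figures and the `allocate` function are made up for illustration. It assumes every VM has shares greater than zero and a limit at least as large as its reservation.

```python
import math


def allocate(capacity, vms):
    """Divide a contended resource (e.g. CPU MHz) between VMs.

    vms maps name -> (reservation, limit, shares). Simplified model:
    reservations are guaranteed first, then spare capacity is split in
    proportion to shares, never pushing a VM past its limit.
    """
    if sum(res for res, _, _ in vms.values()) > capacity:
        raise ValueError("reservations exceed capacity; admission control would refuse this")
    alloc = {name: float(res) for name, (res, _, _) in vms.items()}
    spare = capacity - sum(alloc.values())
    active = {name for name, (_, limit, _) in vms.items() if alloc[name] < limit}
    while spare > 1e-9 and active:
        total_shares = sum(vms[name][2] for name in active)
        handed_out = 0.0
        for name in active:
            _, limit, shares = vms[name]
            grant = min(spare * shares / total_shares, limit - alloc[name])
            alloc[name] += grant
            handed_out += grant
        spare -= handed_out
        # VMs that hit their limit drop out; what they could not use is redistributed
        active = {name for name in active if alloc[name] < vms[name][1] - 1e-9}
    return alloc


if __name__ == "__main__":
    pool = {
        "web": (0, math.inf, 2000),     # no reservation, unlimited, high shares
        "db": (1000, math.inf, 1000),   # 1000 MHz reserved
        "batch": (0, 200, 1000),        # rate limited to 200 MHz
    }
    print(allocate(3000, pool))
```

In this example the database keeps its 1000 MHz reservation, the rate-limited batch VM is capped at 200 MHz, and what batch cannot use is shared between web and db by their 2:1 shares, giving roughly web 1200, db 1600 and batch 200.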

These are all example areas to kickstart your approach; I could write about hundreds of game changers that evolve from technological advances. As with any new technology, the important thing is to view the processes you need to transform from a different angle. The people responsible for managing operational processes will, 9 times out of 10, want to emulate what they do today "because it works and that's how we have always done it". That's rubbish, I say: make sure you educate them, so that the new technology you are designing isn't just put in as a project process exercise and you actually get the best TCO out of it.

The Future and media methods

Looking at how we can solve the problem, I do believe we will always be constrained in keeping processes up to date with new technology trends until we change how IT moguls set the governing criteria for adoption into a formal library.

New technology mediums such as wikis and blogs may emerge as the methodology medium itself; subject to the obvious approval by the relevant bodies, this is something I believe will become more and more accepted by the pragmatists. Look at how the encyclopedia has evolved: it went from book, to Encarta-type resource, to mostly where we are today with wikis on the Internet. The next logical step has to be moving from classroom-based environments to online Web 2.0 experiences that update in real time with trends.

Saturday, 1 August 2009

vSphere Service Console and Partitioning

Previous versions of ESX held the Service Console file systems, such as /boot, on RHEL-formatted disk partitions. vSphere differs: it now stores the Service Console in a VMDK, which can be held either on a local VMFS partition or on a shared storage VMFS LUN. Some of the advantages of this include:

- Being able to snapshot the VM and roll back if ever needed after upgrading/updating, should the changes cause issues

- Possibly removing the way the COS is tied to CPU0 as it is in ESX 3.x; with it being a VM, I presume it can be scheduled on any of your zillion cores

- Probably being able to provide dedicated resource throttling for the Service Console VM so it doesn't run away with the whole of a single CPU

From my current observations, when performing a manual install of vSphere from DVD/ISO, the installer creates an extended partition covering all of the spare disk space and formats it with VMFS to hold the Service Console VMDK.

The Problem

So why is this a problem? In most environments it won't be; however, if you want the freedom to partition, and to keep the Service Console on a separate VMFS partition away from the VMs on a local VMFS volume, then it is not possible with a manual install.

The Solution

The only way I have been able to achieve manual partitioning is by building a customised Kickstart configuration file. vSphere again appears to have changed on this front: in previous versions you created a KS file by downloading it from the ESX web site, whereas in vSphere the easiest way I found of building a KS file was to build the ESX host from CD first and then grab the ks.cfg file from /root. I then edited this file with the relevant storage parameters as follows:

    part 'none' --fstype=vmkcore --size=110 --onfirstdisk
    part 'Storage1' --fstype=vmfs3 --size=15000 --onfirstdisk
    virtualdisk 'esxconsole' --size=7804 --onvmfs='Storage1'
    part 'swap' --fstype=swap --size=800 --onvirtualdisk='esxconsole'
    part '/var/log' --fstype=ext3 --size=2000 --onvirtualdisk='esxconsole'
    part '/' --fstype=ext3 --size=5000 --grow --onvirtualdisk='esxconsole'
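Before feeding an edited kickstart file to the installer, it is worth adding up the sizes (in MB). The Python sketch below parses the part/virtualdisk lines and checks that the esxconsole VMDK fits on its VMFS partition and that the Service Console partitions fit inside the VMDK. It is a hypothetical helper, not a VMware tool; it only understands the options used in the lines above and treats --size on a --grow partition as its minimum.

```python
import shlex

KS_LINES = """\
part 'none' --fstype=vmkcore --size=110 --onfirstdisk
part 'Storage1' --fstype=vmfs3 --size=15000 --onfirstdisk
virtualdisk 'esxconsole' --size=7804 --onvmfs='Storage1'
part 'swap' --fstype=swap --size=800 --onvirtualdisk='esxconsole'
part '/var/log' --fstype=ext3 --size=2000 --onvirtualdisk='esxconsole'
part '/' --fstype=ext3 --size=5000 --grow --onvirtualdisk='esxconsole'
"""


def parse(ks_text):
    """Return (kind, name, options) tuples for part/virtualdisk lines."""
    entries = []
    for line in ks_text.splitlines():
        tokens = shlex.split(line)  # strips the single quotes around names
        if not tokens or tokens[0] not in ("part", "virtualdisk"):
            continue
        kind, name, *flags = tokens
        opts = {}
        for flag in flags:
            key, _, value = flag.lstrip("-").partition("=")
            opts[key] = value  # bare flags such as --grow map to ""
        entries.append((kind, name, opts))
    return entries


def check_sizes(entries):
    """List any container that is too small for what is placed on it."""
    vmfs = {n: int(o["size"]) for k, n, o in entries
            if k == "part" and o.get("fstype") == "vmfs3"}
    vdisks = {n: (int(o["size"]), o["onvmfs"]) for k, n, o in entries
              if k == "virtualdisk"}
    problems = []
    for vdisk, (size, datastore) in vdisks.items():
        if size > vmfs.get(datastore, 0):
            problems.append(f"{vdisk} ({size} MB) does not fit on {datastore}")
        used = sum(int(o["size"]) for k, n, o in entries
                   if k == "part" and o.get("onvirtualdisk") == vdisk)
        if used > size:
            problems.append(f"partitions on {vdisk} need {used} MB, only {size} MB allocated")
    return problems


if __name__ == "__main__":
    print(check_sizes(parse(KS_LINES)) or "sizes look consistent")
```

With the values above, swap, /var/log and / need 7800 MB of the 7804 MB esxconsole VMDK, which itself fits comfortably on the 15000 MB Storage1 VMFS partition.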

A word of advice: in all the previous incarnations of ESX it was always best practice when building ESX to either physically disconnect the host from shared storage OR remove any zoning to the SAN. I still strongly recommend you do this; if you don't, you may hose a complete VMFS LUN.

Hope this helps, and if anyone from VMware can shed light on the benefits of using a VMDK for the Service Console, aside from the obvious ones, please do.
