Sunday, 28 June 2009

The Virtual Glue

Available today is the latest beta release of VMware Studio 2.0, which enables you to create pre-built Virtual Appliances. You can then export them as OVFs and also add these appliances to vApps, a new way of grouping applications in vSphere. With VMware Studio you can build your own wrapped appliances for your own virtualised environment; it is not exclusively for ISVs, and to show this it even handles Microsoft in the 2.0 release.

I recommend you check out the full details and recorded demos on the official VMware site at http://tinyurl.com/n7lv7l . The benefits of VMware Studio and vApps are huge. It is certainly going to be the enabler for the future strategy of application delivery in completely virtualised environments, and something I will be looking into in more detail.

The release of VMware Studio got me thinking about the state of the whole current Virtual Appliance scene. One of the questions I asked myself when looking at the presentation was:

Virtual Appliances, shouldn't they be mainstream now?

Virtual Appliances (VAs) are at some point certainly going to become more and more present within virtualised datacentres, but the main question is when. Mendel Rosenblum, the original virtualisation visionary and VMware co-founder, initially promoted the whole Virtual Appliance trend by stating in 2007 that Virtual Appliances would be mainstream and adopted heavily by external ISVs to wrap COTS applications. He also stated that this would replace the OS as we know it and run almost bare metal on the hypervisor.

The highlighted value proposition included:

- Removal of the OS from the stack, replacing MS Windows with JeOS/BusyBox-type OSs to run core services

- Turnkey application deployment of, say, a whole CRM landscape in one OVF file containing multiple VMs

- Sending OVF content and updates to the customer by a dynamic delivery process via the internet/cloud, or shipping on DVD etc. directly to the customer

- Appliances and applications would be tuned by the ISV and not by the internal application or IT ops team, which removes any configuration burden

- Licensing is much easier: you hand this burden to the ISV to manage, and the same goes for product updates, which they deliver dynamically

All of these benefits of pre-packaged VAs ultimately mean that the work undertaken in the pre-project phase today is heavily reduced in scope. Even system sizing is a breeze, as the ISV does this and tunes the appliance to the volume of users physically possible on it. This is no different to the networking/security appliances that exist in datacentres today: they are built on a BSD/Linux variant and finely tuned to a theoretical maximum user base.

Despite all of the benefits of Virtual Appliances quoted by the visionaries, in 2009 we have yet to see vast arrays of Virtual Appliance offerings from the big vendors such as SAP, HP and Oracle. To date, the only example I am aware of that was heavily advertised and targeted at organisations was BEA WebLogic LiquidVM, which appeared to get quashed following the Oracle merger.

So why the lack of mainstream adoption of VAs in virtualised datacentres? A few things I believe may be the reasons and possible barriers to adoption are:

The R word...Responsibility!

Ultimately a large amount of responsibility needs to be taken on by internal virtualisation teams to promote the art of the possible with VAs and to educate on what benefits they provide as opposed to current methodologies. Development tools such as the latest release of VMware Studio can also enable internal teams to build wrapped custom builds for internal applications and take away the large amount of work involved in deploying components in the VM. The barrier to adoption here is that there is limited mileage in promoting VAs and custom packages unless business application teams and the other parties involved in a typical deployment are able to share their side of the story on pre-deployment options for the relevant application stack.

Responsibility also lies with ISVs to educate customers, in a sideways fashion, on Virtual Appliances. Your app bods probably meet ISVs more regularly than you think, doing what the infrastructure peeps do on typical engagements with hardware suppliers: getting regular technology updates, presentations and roadmaps on the latest products. I am sure the ISV does educate, or has the odd slide, on Virtual Appliances (if you're lucky), but more interaction between app bods and infrastructure bods is needed once this is known, so that you can actually use the technology.

It is clear that the future datacentre, whether mainframe or x86, will be fully virtualised, so it's time for ISVs to start aligning their strategy. Ultimately it may mean more revenue, certainly if the appliances can be delivered dynamically across the internet and dropped straight into the customer's virtualised farm.

Culture change

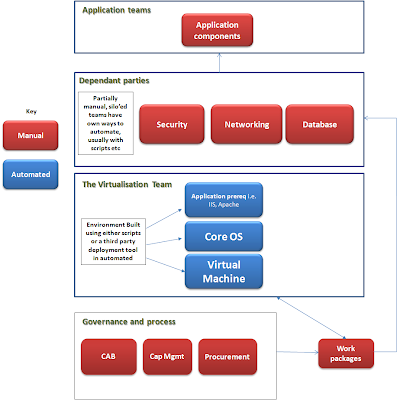

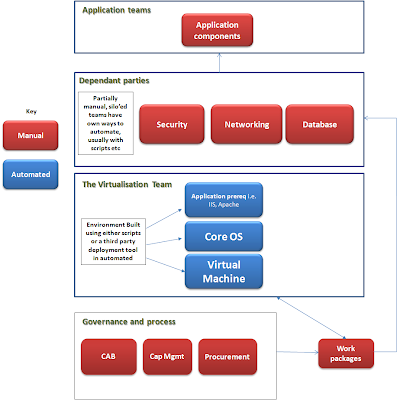

As you can see from my pathetic attempt at a rough process flow diagram (excuse the poor resolution), I show a rough guide to the typical people and processes involved in the delivery of applications. Also shown are the deployment tasks that are performed manually against those that are automated. It is clear that multiple people are involved in the average deployment; the roll call in many corps includes change teams, test teams, project managers, procurement, architects, IT ops and many more bods.

Deploying turnkey Virtual Appliances for, say, a new SAP ERP landscape on 10-20 machines in a period of 2-3 days, rather than 2-3 machines in 10-20 days, clearly shows that current long deployment processes are reduced and fewer people need to be involved in a deployment when using Virtual Appliances alongside orchestration and groupings such as vApp. The problem with this, however, is that you get people being protective of their roles and the age-old turf war developing, so it is not something that can just be implemented straight away.
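To put rough numbers on that comparison, here is a quick back-of-envelope calculation. It uses only the illustrative ranges quoted above (10-20 machines in 2-3 days against 2-3 machines in 10-20 days); these are ballpark figures, not measurements from a real project.

```python
# Back-of-envelope deployment throughput, using the illustrative ranges above.
# These are rough figures for comparison, not measured data.

def machines_per_day(machines, days):
    """Return deployment throughput in machines per day."""
    return machines / days

# Virtual Appliance approach: 10-20 machines in 2-3 days.
va_low = machines_per_day(10, 3)    # slowest case
va_high = machines_per_day(20, 2)   # fastest case

# Traditional approach: 2-3 machines in 10-20 days.
trad_low = machines_per_day(2, 20)   # slowest case
trad_high = machines_per_day(3, 10)  # fastest case

# Most conservative and most optimistic speed-up ratios.
speedup_min = va_low / trad_high
speedup_max = va_high / trad_low

print(f"VA: {va_low:.1f}-{va_high:.1f} machines/day")
print(f"Traditional: {trad_low:.1f}-{trad_high:.1f} machines/day")
print(f"Speed-up: roughly {speedup_min:.0f}x to {speedup_max:.0f}x")
```

Even the most conservative pairing of those ranges comes out at around an order of magnitude, which is why the people and process side becomes the real bottleneck.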

Evangelising about the agility that VAs provide is definitely something that can aid their adoption. For education purposes I recommend starting with quick-win examples such as LAMP stacks. This will make end users aware of what can be packaged within a VA and show that VAs are nothing out of the ordinary; once they have seen a basic example that they are familiar with from typical VMs, they will become OK with the fact that it's not all bad. Once confidence has grown, move on to cut-down appliance OSs such as JeOS...it's just a matter of breaking the barrier down.

vApp functionality in vSphere enables organisations to reduce manual and people processes even further. It enables you to compile the complete application stack and configure all associated components from one single master definition. Tie this in with some VMware orchestration capability, where you could, say, deploy the database components via API as part of the VM build and provision the relevant networking components, and it is clear the potential is going to be huge.
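As a rough illustration of the "one single master definition" idea, here is a minimal sketch in Python. It is not the vSphere API or the OVF schema: the class names, fields, VM names and network label are all hypothetical, made up purely to show how a single definition could describe the stack and the order in which its tiers power on.

```python
from dataclasses import dataclass, field


@dataclass
class VMSpec:
    """One VM inside the application stack (hypothetical structure)."""
    name: str
    role: str
    start_order: int  # lower numbers power on first


@dataclass
class VAppDefinition:
    """A single master definition for a whole application stack (hypothetical)."""
    name: str
    network: str
    vms: list = field(default_factory=list)

    def power_on_sequence(self):
        """Return VM names grouped by start order, lowest first."""
        groups = {}
        for vm in self.vms:
            groups.setdefault(vm.start_order, []).append(vm.name)
        return [groups[order] for order in sorted(groups)]


# Example: a made-up three-tier CRM landscape.
crm = VAppDefinition(
    name="crm-landscape",
    network="crm-vlan",
    vms=[
        VMSpec("crm-web-01", "web", 3),
        VMSpec("crm-db-01", "database", 1),
        VMSpec("crm-app-01", "application", 2),
        VMSpec("crm-web-02", "web", 3),
    ],
)

print(crm.power_on_sequence())
```

The point of the sketch is simply that the database, application and web tiers are described once, in one place, and anything that deploys or starts the stack works from that definition rather than from a runbook.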

ISVs

It is going to be quite a while before the current licensing models that ISVs offer, and that companies signed up to in 4-5 year agreements, are migrated into licensing plans tailored for Virtual Appliances. Also, at the moment very few organisations are likely to be deploying new application landscapes due to the economic climate. For those who have apps that do have VA alternatives, there is naturally a transformation project cost associated with moving the application.

Also important to highlight is that the reseller chain that large ISVs use to distribute software would most likely become redundant. Deploying applications dynamically from a centralised HQ would almost remove any reseller and distribution partnerships.

Ye olde IT engineer

Something else which may be a reason for the lack of adoption is this guy/gal. They like to deploy a full SOE and customise it according to the application requirement. They also like the fact that they can administer that server and the relevant OS nuts and bolts such as running processes and services, and that it can be monitored and looked at if things go Pete Tong (wrong).

Take this role away from the average IT ops guy and run bare-minimum appliances, and it's quite easy to see how they could become disillusioned and disgruntled by changing how things are done today.

Summary

Hopefully this post gave some insight into what barriers you may experience and how to overcome them. Massive potential exists in Virtual Appliances and in what VMware Studio can do. The vApp potential is rather large, and I will hopefully write a post on this when I get time to look at the VMware Studio beta in more detail.

Sunday, 21 June 2009

So the Frankenstein Hypervisor begins!

As expected, after the recent acquisition of Virtual Iron, Oracle have wasted no time in slashing any future Virtual Iron technical development. Check out http://www.theregister.co.uk/2009/06/19/oracle_kills_virtual_iron/ for the full gory details of the bloodbath.

The Virtual Iron brand as we know it has also been slashed as a procurable product, meaning that current customers are now hung out to dry: they have to either transition across to the new Frankenstein hypervisor of Oracle VM or seek an alternative product. The news from Oracle also means that any partners that resell the software will lose out and have it cut from their technology offerings. They will most likely be smaller resellers, as Virtual Iron is not as mainstream and feature-rich as the market leaders, so it will hit their sales revenue. Oracle have stated that support for Virtual Iron customers will be a lifetime offering (at what charge is not known, though). I expect this support to be of use to current customers for only 6-12 months, as it will be difficult to virtualise newer applications once ISVs class the hypervisor platform as legacy.

Bolt on neck

Oracle have claimed that Virtual Iron technology will be integrated into Oracle VM. Being the skeptic that I am, I seriously doubt that we will see much Virtual Iron technology make it into Oracle VM other than quick wins such as live migration and a few other current de facto standards for a hypervisor product. The only statement that may prove this to be total tosh is that they also said they preferred Virtual Iron to the Sun xVM offering, which I find interesting; I will post another piece on why I think xVM is a better platform at some point in the future. Transition and development of VI into OVM is likely to take quite a while, and I expect we are talking years rather than months for the change to occur.

One thing this announcement shows is how keen Oracle is to get into the mainstream hypervisor business. In comparison (and I know it's not finalised yet), they have officially revealed very little planning on what will come out of the Sun acquisition. So for them to completely cut off any growth spurt of Virtual Iron and send the message that it is becoming part of OVM shows they want to focus on beefing up the Oracle VM platform and get the rubber hitting the road.

Time to capitalise

Various people think this is the right time for VMware, Citrix or Microsoft to gain some market share from current Virtual Iron customers, and I agree. Let's face it, any customers who want to expand current Virtual Iron estates will be faced with only the Oracle VM technology on future roadmaps. Some may be coming up to maintenance renewals and considering whether they need to grow up and get onto a hypervisor, such as vSphere, that is sustainable and capable of virtualising any workload. vSphere also has some great low-end product offerings that can get smaller companies onto the VMware ladder.

Customers who are looking to go elsewhere for a datacenter virtualisation platform will need to know that some of the following may arise:

- OVM may continue to have a lack of partners and maturity in datacentres unless it grows up fast. For over three years VMware has worked heavily with hardware and software partners to ensure that high performance levels can be achieved, both with the infrastructure components hosting the virtualised environment and with the applications that run on top of it.

- I predict that longer term OVM is likely to be more expensive (come on, we all know it's the Oracle way) and that current extended support maintenance for VIron will rocket to force people onto OVM.

- There may be a lack of the extensive management capability that is on offer today from alternatives such as vSphere and from the competitors slightly behind VMware.

- The new OVM may lack granular licensing plans and levels, which is where VMware is currently very strong and dominant.

- Performance may be weak...come on, look at the results coming from vSphere: http://virtualgeek.typepad.com/virtual_geek/2009/05/integrated-vsphere-enterprise-workloads-all-together-at-scale.html

Summary

The evidence suggests Oracle want to propel themselves into the virtualisation industry and they are not hanging about. This will hopefully mean good news from a licensing perspective for users of Oracle technology that want to virtualise, if they run OVM. It may also remove a barrier currently put in place by Oracle and allow users of other hypervisors to virtualise too. This may be possible as Oracle gain more "happiness" that they are making revenue from the popularity of OVM, and relax the "rules" on virtualising current apps and DB platforms.

For you and me this also means that at least VMware (and Citrix at a push) are kept on their toes and can take note that eventually they will have another virtualisation vendor snapping at their heels for market share, in what is going to be a more prominent form than Oracle VM is today.

Tuesday, 16 June 2009

vSphere - The littlest things make a difference

With the recent arrival of a DL380 G6 to test vSphere, I have been able to see some of the extended features, and also some product functionality unleashed by the new Xeon 5500 (Nehalem) platform and its associated extensions such as VT-d, VT-c etc. Some of the nice features I've noticed so far include:

Storage Views (Inventory > Inventory > Datastores > Select your DS > Right click on sort tabs)

This is really useful, especially when it comes to swap consumption if you are operating with multiple LUNs and differently sized VMs, where in the past you have tended to use rule-of-thumb sizing to calculate what needs to be reserved on each LUN. The new extended vCenter views enable you to see the amount of space consumed by memory swap to disk.
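For anyone still doing the rule-of-thumb version by hand, the sum is roughly as follows. On ESX a VM's swap file is sized at its configured memory minus its memory reservation, so the space to hold back on each LUN is the total of that across the VMs living there. The sketch below assumes swap files are stored with the VM (the default), and the VM names, LUN names and sizes are made up for illustration.

```python
def swap_file_mb(configured_mb, reservation_mb=0):
    """Swap file size for one VM: configured memory minus memory reservation."""
    return max(configured_mb - reservation_mb, 0)


def swap_per_datastore(vms):
    """Sum the swap space needed on each datastore.

    vms is a list of (name, datastore, configured_mb, reservation_mb) tuples.
    """
    totals = {}
    for _name, datastore, configured_mb, reservation_mb in vms:
        needed = swap_file_mb(configured_mb, reservation_mb)
        totals[datastore] = totals.get(datastore, 0) + needed
    return totals


# Hypothetical inventory: names and sizes are illustrative only.
inventory = [
    ("vm-a", "lun01", 4096, 0),
    ("vm-b", "lun01", 8192, 2048),
    ("vm-c", "lun02", 2048, 2048),  # fully reserved, so no swap space needed
]

for datastore, mb in sorted(swap_per_datastore(inventory).items()):
    print(f"{datastore}: reserve {mb} MB for VM swap")
```

The new storage views save you doing this by hand, but the sum is still handy when planning a LUN before anything is deployed on it.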

VM Memory Options

I've always liked AMD64 chips; they have always used NUMA. Intel, on the other hand, originally didn't: they went with a northbridge for CPU access to RAM.

With Core i7 (Nehalem), Intel now has NUMA, and this is definitely going to mean better things for running virtualised workloads on Intel hardware. I've noticed the "NUMA memory affinity" option for assigning memory nodes to VMs, which I presume offers improved performance for VMs that would benefit from using certain dedicated memory banks rather than accessing memory on both.

I'd be interested to know if anyone has used this with VMotion, and whether VMotioning to a host that doesn't have NUMA, e.g. Xeon 7400, is allowed (I predict not).

Rescanning for Datastores

This is a real nice one: it enables a mass rescan for new datastores, which in ESX 3.x was a pig as you had to do it on each host. This now takes it up the hierarchy. I expect this would be useful when provisioning a new host or in DR scenarios.

I'll feed back in the next week (I do have a day job, you know) with some more new and beneficial options. Time to test newer features like FT and VMDirectPath I/O.
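For comparison, the old per-host chore amounts to a loop like the one below. This is only a sketch of the pattern, not VMware tooling: `rescan_all` and the `rescan_host` callable are hypothetical stand-ins for whatever actually triggers the rescan on one host, and the host names are made up.

```python
def rescan_all(hosts, rescan_host):
    """Run rescan_host against every host; return (succeeded, failed) lists."""
    succeeded, failed = [], []
    for host in hosts:
        try:
            rescan_host(host)
            succeeded.append(host)
        except Exception:
            # Keep going so one unreachable host does not block the rest.
            failed.append(host)
    return succeeded, failed


def fake_rescan(host):
    """Stand-in for a real per-host rescan; pretends esx03 is unreachable."""
    if host == "esx03":
        raise RuntimeError("host not responding")


ok, bad = rescan_all(["esx01", "esx02", "esx03"], fake_rescan)
print("rescanned:", ok)
print("failed:", bad)
```

Having the rescan available higher up the inventory means vCenter does this fan-out for you, which is exactly what you want mid-DR when nobody has time to click through every host.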

Dan

Tuesday, 9 June 2009

A dark dark cloud

Just catching up with blogs/news and noticed that this event happened this week: http://www.theregister.co.uk/2009/06/08/webhost_attack/ . The geezer who ran the company also took his own life: http://timesofindia.indiatimes.com/Bangalore/Techie-hangs-himself-in-HSR-Layout-/articleshow/4633101.cms . So a very confusing story mixed in with the technical catastrophe that occurred.

More on the talking tech side, this event really is a stark warning of the potential destruction that a self-service administrative interface for "cloud" services can wreak on end users. This particular provider was easily exploited due to insecurities in the HyperVM product, which gave the attackers full root access to delete practically anything public facing.

OK, so this HyperVM app was insecure, but how many other shops enable root because they are lazy? Who remembers when we used to have root enabled by default in ESX 2.x? I wonder how many other apps developed in what is effectively still the early-adopter era for cloud are being built with very little security governance and no certified hardening process (I'm not a developer, so excuse the possible lack of knowledge here).

This news piece has also provided a warning that public cloud services and the current ecosystem of management interfaces, in their current bleeding-edge form, are still very raw and rough around the edges. It certainly highlights that cloud services are susceptible to destruction on this scale through security flaws in any of the interfaces that manage "cloud" datacentres.

I guess the question is: would this type of exploit have occurred in a datacentre which was physically secured and more conventional by today's standards, i.e. a private cloud? I think not. The security model is more aligned to current conventional security policies, you are not putting security in the hands of your service provider as much, and you are most likely using a proprietary management interface and virtualisation platform like VMware which is tried and tested, not one of the new generation of cloud-developed software.

Another thing with this news story is the sheer lack of backup and recovery that seemed to be on offer to restore customer workloads. This, along with less stringently imposed SLAs, is what initially makes cloud costs look so appealing on the balance sheet, something that many C-levels are certainly likely to be attracted to in cloud computing. Before investigating the feasibility of the cloud, it may be wise to ensure that typical belt-and-braces activity such as backup and recovery, which is currently de facto in any datacentre, is part of your service, or even performed to another cloud provider such as Amazon S3. If backup isn't an available option, think very hard about committing and running your business on what is effectively a ticking timebomb.

Hopefully this provided a brief outlook on cloud and the possible insecurities that may exist for current early adopters. My condolences go out to anyone related to the poor guy that took his life.

Monday, 8 June 2009

New Poll - Blueprinting before you upgrade to vSphere

After seeing a few "minor" issues that people within the VM community and blogosphere are having with vSphere 4 upgrades, I have built a poll to see who is going through blueprint and UAT testing processes before performing an upgrade.

I guess if you have done a blueprint you are very efficient and have a good working relationship with VMware to be on beta programmes etc.

Will be interested to see the results!

Sunday, 7 June 2009

Cisco UCS C Series - Some answered questions

For those unaware this week Cisco has announced rackmount servers to complement the UCS family http://www.datacenterknowledge.com/archives/2009/06/03/cisco-unveils-rackmount-servers-for-ucs/.

Way back in March, in this VMlover blog post http://vmlover.blogspot.com/2009/03/ucs-again.html , I raised a few opinions and views on UCS and questions around the possible future of the overall hardware offering. Surprisingly, Cisco answered some of these directly in a feedback video (I nearly choked on my coffee when I found this out). The original post asked whether Cisco would release a rackmount solution alongside blade, to give customers that do not use blade today a server alternative within the Cisco range, and lo and behold it now appears the question has been answered by the release of C Series rackmount servers in UCS!

Cisco's strategy for entering the datacenter world is exceptionally aggressive and certainly risky in the middle of an economic downturn; I give them ultimate credit for this. Now that Cisco have the full server model portfolio, here are some more thoughts and questions on Cisco's Datacenter 3.0 strategy:

Will UCS be fully price competitive against vendors like HP/IBM/Dell, or will it simply sit at the same price point and pitch customers on indirect cost savings achieved through Unified Connectivity and enhanced agility with "free" centralised management tools?

With Cisco having mostly large datacenter coverage with networking and internet connectivity, I suspect that they will be competitive to a large degree. I'm not talking Dell drop-your-pants material here, but I do think they will bite at HP/IBM offerings and go after customers with aggressive sales.

Cisco will most likely want to perform TCO/ROI exercises to "work with your organisation". They do this quite successfully on product sets today, and why not, as long as they can show you that it has cost-saving potential. If it doesn't, I think they know where they are heading with customers; this is commodity, remember.

Will the provided UCS management tools extend to cover Rackmounts?

I suspect that we may see point management of server builds and deployments on rackmount, and we may see FCoE provisioning and zoning policies being defined via UCS Manager as with the B Series boxes.

Most alternative blade manufacturers provide the management stack at host level, with examples being the HP SIM/Altiris offerings, so UCS is not new to this arena. But what UCS does have that is different on B Series blades is a component called the CMC (Chassis Management Controller), which resides on a fabric extender module. On observation it looks to work in similar fashion to iLO on HP blades, but it extends further down the stack to perform activities such as managing physical hardware components, zoning storage, applying QoS networking policy on each physical UCS blade and many more.

It will be real cool if rackmounts also get this central, policy-driven operational control and management environment, all provided through the onboard CMC across unified fabric. It would truly add to the value proposition of reducing operational cost without purchasing additional tools and networking infrastructure to run it. This could even be the start of the truly lights-out datacenter! God, UCS really is the x86 mainframe when you look at its potential.

Could C series Rackmount be more popular than the B Series blade?

With rackmount being the grandfather of commodity computing in datacenters, this could happen. Some organisations select rackmount servers because it reduces risk and is within their comfort zone. It will be interesting to see how sales figures compare between the two.

Turn this statement on its head, though: networking folk have used blade in telecommunications for yonks. The 6500 Series data switching is fully bladed and offers BIG cost benefits with overall consolidated footprint and management, and MDS SAN connectivity is also chassis based. With Nexus being blade based, this could mean blade sales are more predominant with UCS. I personally think blade is the way to go; it's consolidation at the highest level, which drives efficiency in your datacenter.

Rackmount likely to provide more dense memory?

Most certainly yes. Architecturally, rackmount tin has more space to put the RAM, and the new Cisco UCS extended memory capability offers a larger opportunity to exploit the custom "Catalina" ASIC, which provides more memory capacity within UCS than competitors.

You could ask whether 384GB of memory in a blade is enough, though! However, I'm sure we said this about 4GB of memory in the pre-64-bit era.

When do Cisco buy the storage company or at least OEM storage?

I'll maybe let the future do the talking on this one...I can see it happening and just wanted to give myself the opportunity to go back and revisit unanswered topics in months to come :)

Summary

Overall, the more I read about UCS and its inbuilt technological benefits, the more I really want to see it in action and being deployed, to see what results can be achieved both technically and operationally. I believe Cisco is releasing UCS this quarter, so I will be extremely interested to hear on the grapevine and blogosphere who is deploying UCS.
